Android 16 and Gemini aren't separate initiatives. One is the operating system; the other is the intelligence layer. Together, they show how Google is rethinking mobile interaction by fusing system architecture with AI capability, and the traditional lines between OS, apps, and cloud services are shifting as a result. Gemini isn't confined to a single app. It's threaded into the OS and available across screens, apps, and input types. The change goes beyond cosmetics: it introduces new tools, constraints, and design questions for users and developers alike.

The Gemini Layer: Not Just Assistant 2.0

Gemini is designed to be ambient and multimodal. In Android 16, it can be invoked by voice, through the system UI, or from what's currently on screen. It reads the screen, draws on app metadata, and shapes its responses around prior activity.

Holding the power button no longer just brings up Assistant. Gemini now takes in the foreground app, recent actions, and available metadata. It can summarize a long message thread or describe an image using OCR and vision models. These aren't scripted features. They rely on prompting: compact, token-efficient inputs that combine instructions for an instruction-tuned model with lightweight context drawn from system signals.
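
To make "token-efficient inputs" concrete, here is a minimal sketch of how an app-side helper might assemble a prompt from an instruction plus prioritized context signals, skipping lower-priority context once a budget is used up. The `Signal` type, the priority scheme, and the four-characters-per-token estimate are all assumptions for illustration; this is not how Android or Gemini actually builds its prompts.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    """One piece of hypothetical context, e.g. the foreground app or a recent action."""
    label: str
    text: str
    priority: int  # lower number = more important


def estimate_tokens(text: str) -> int:
    # Crude heuristic (assumption): roughly four characters per token.
    return max(1, len(text) // 4)


def build_prompt(instruction: str, signals: list[Signal], budget: int) -> str:
    """Keep the instruction, then add signals in priority order while they fit the budget."""
    parts = [instruction]
    used = estimate_tokens(instruction)
    for sig in sorted(signals, key=lambda s: s.priority):
        line = f"[{sig.label}] {sig.text}"
        cost = estimate_tokens(line)
        if used + cost > budget:
            continue  # skip this signal; a smaller, lower-priority one may still fit
        parts.append(line)
        used += cost
    return "\n".join(parts)
```

With a 40-token budget, a short foreground-app signal and a recent action fit, while a 400-character metadata dump is dropped rather than truncating the instruction.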

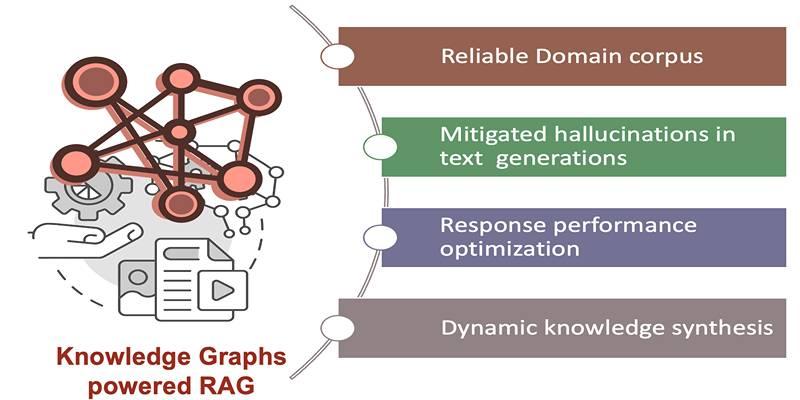

But this flexibility introduces ambiguity. Gemini can't read your mind. If the screen context is noisy, say multiple chat windows or overlapping elements, the model's guess may be off. These aren't hallucinations in the usual sense but context mismatches: the model answers confidently about the wrong part of the screen. Developers have to sanitize inputs and define hard boundaries to limit drift. Prompt scaffolding becomes a form of UI design.
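
One way to read "sanitize inputs and define hard boundaries" is this: keep only the focused window, drop duplicated text, and fence screen content off from the instruction so the model treats it as data. The sketch assumes a hypothetical list of on-screen elements carrying a `window_id` and a `focused` flag. Real screen-context data on Android is structured differently.

```python
from dataclasses import dataclass


@dataclass
class ScreenElement:
    window_id: str  # hypothetical identifier for the window the element belongs to
    text: str
    focused: bool   # True if the element is in the window the user is interacting with


def sanitize_context(elements: list[ScreenElement], max_chars: int = 2000) -> str:
    """Keep text from focused windows only, drop duplicates, and cap the total length."""
    seen = set()
    kept = []
    for el in elements:
        text = " ".join(el.text.split())  # collapse runs of whitespace
        if not el.focused or not text or text in seen:
            continue
        seen.add(text)
        kept.append(text)
    return "\n".join(kept)[:max_chars]


def scaffold_prompt(task: str, elements: list[ScreenElement]) -> str:
    """Wrap screen text in explicit delimiters and give the model a way to decline."""
    context = sanitize_context(elements)
    return (
        f"Task: {task}\n"
        "Use only the text between <screen> tags. If it is insufficient, answer UNKNOWN.\n"
        f"<screen>\n{context}\n</screen>"
    )
```

The explicit "answer UNKNOWN" escape hatch is the scaffolding half of the idea. It gives the model a sanctioned alternative to guessing when the focused window doesn't contain the answer.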

Android as an AI Host, Not Just an OS

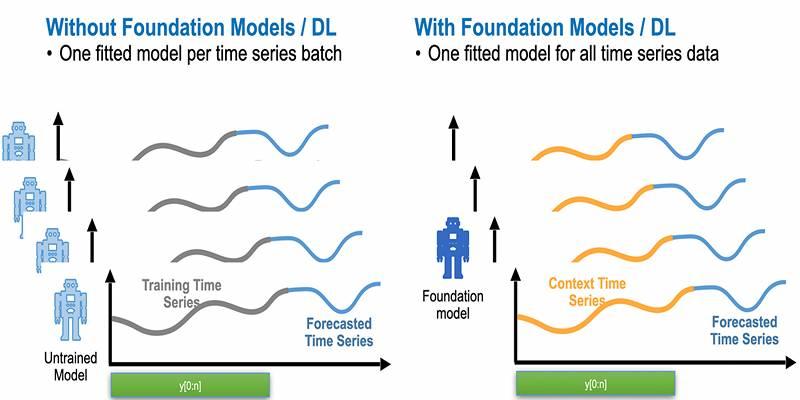

Android 16 treats AI tasks like native workloads. The AICore service handles this, sitting between apps and Gemini APIs. It manages inference routing — some queries run on-device, others are sent to Gemini in the cloud. Developers don’t pick one or the other. AICore decides based on model size, privacy needs, and hardware availability.
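
The routing decision described above can be pictured as a small policy function. What follows is a hypothetical sketch of that kind of policy, not AICore's actual logic. Every type, field name, and rule in it is an assumption chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"
    REJECT = "reject"


@dataclass
class Request:
    required_model_gb: float    # hypothetical memory estimate for the task's model
    privacy_sensitive: bool     # True if raw content must not leave the device
    needs_deep_reasoning: bool  # True for multi-step tasks a small model handles poorly


@dataclass
class Device:
    has_npu: bool
    free_model_memory_gb: float


def route(req: Request, dev: Device) -> Target:
    """Illustrative policy: privacy first, then hardware fit, then task complexity."""
    fits_locally = dev.has_npu and req.required_model_gb <= dev.free_model_memory_gb
    if req.privacy_sensitive:
        # Sensitive content may only run locally; refuse rather than upload it.
        return Target.ON_DEVICE if fits_locally else Target.REJECT
    if fits_locally and not req.needs_deep_reasoning:
        return Target.ON_DEVICE
    return Target.CLOUD
```

Rejecting sensitive work that can't run locally is one conservative choice. The hybrid approach described below would instead send only filtered context to the cloud.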

On Pixel 9 and newer, Gemini Nano models run locally for low-latency tasks like summarization or intent recognition. These are distilled versions, quantized for memory and power constraints. The trade-off is depth. Nano can’t handle complex chains of reasoning. It works best for clear tasks with tight inputs. Anything broader gets sent to the cloud.
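
The memory pressure behind quantization comes down to simple arithmetic: weight storage is roughly parameter count times bits per weight. The sketch uses an illustrative 3-billion-parameter model, which is not an official Gemini Nano size. It also ignores activations, caches, and runtime overhead.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights alone, in GiB."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / (1024 ** 3)


if __name__ == "__main__":
    params = 3e9  # illustrative size, not an official Gemini Nano figure
    for bits in (32, 16, 8, 4):
        print(f"{bits:>2}-bit: {weight_memory_gib(params, bits):.2f} GiB")
```

At 16-bit precision, the weights alone need about 5.59 GiB. At 4-bit, they need about 1.40 GiB, a fourfold reduction. That difference decides whether a model can share phone memory with everything else running.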

This hybrid model isn’t just about performance. It supports localized data handling. An app can classify or summarize user content without ever uploading it. Only filtered context flows to Gemini Pro in the cloud. This helps address privacy rules in regions like the EU or India without removing features outright.
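
Here is a minimal sketch of "only filtered context flows to the cloud." It redacts obvious identifiers locally and forwards a locally computed label plus the redacted text instead of the raw content. The two regular expressions are deliberately simple assumptions, and real PII detection needs far more. `build_cloud_payload` is a hypothetical helper, not an Android or Gemini API.

```python
import re

# Deliberately simple patterns (assumption); real PII detection is much broader.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def build_cloud_payload(raw_text: str, local_label: str) -> dict:
    """Hypothetical helper: combine an on-device classification with redacted text."""
    return {"label": local_label, "context": redact(raw_text)}
```

For example, `build_cloud_payload("Call me at +1 555 123 4567 or mail jo@example.com", "scheduling")` forwards the context as `"Call me at [PHONE] or mail [EMAIL]"`.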

There are limits. Local inference has strict memory ceilings. If multiple apps request AI tasks, AICore can delay or reject lower-priority jobs. Developers are expected to register tasks in advance and specify resource profiles. That makes AI feel like part of the OS scheduler, not a bolt-on library.
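
The behavior described here resembles classic admission control. Below is a hypothetical sketch of it, not AICore's documented behavior. The `Task` fields, the priority ordering, and the rule for when to delay versus reject are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int   # higher = more important
    memory_mb: int  # declared resource profile, registered in advance


class Scheduler:
    """Admit tasks under a memory ceiling; queue important ones, reject the rest."""

    def __init__(self, ceiling_mb: int, min_priority_to_queue: int = 1):
        self.ceiling_mb = ceiling_mb
        self.min_priority_to_queue = min_priority_to_queue
        self.running: list[Task] = []
        self.delayed: list[Task] = []

    def used_mb(self) -> int:
        return sum(t.memory_mb for t in self.running)

    def submit(self, task: Task) -> str:
        if task.memory_mb > self.ceiling_mb:
            return "rejected"  # can never fit, even on an idle device
        if self.used_mb() + task.memory_mb <= self.ceiling_mb:
            self.running.append(task)
            return "running"
        if task.priority < self.min_priority_to_queue:
            return "rejected"  # low-priority work is dropped rather than queued
        self.delayed.append(task)
        self.delayed.sort(key=lambda t: -t.priority)  # most important waits at the front
        return "delayed"

    def finish(self, name: str) -> None:
        """Free a finished task's memory, then admit delayed tasks that now fit."""
        self.running = [t for t in self.running if t.name != name]
        still_waiting = []
        for task in self.delayed:  # already ordered by descending priority
            if self.used_mb() + task.memory_mb <= self.ceiling_mb:
                self.running.append(task)
            else:
                still_waiting.append(task)
        self.delayed = still_waiting
```

Declaring `memory_mb` up front is what makes this workable. The scheduler can decide before loading anything, which is the point of registering tasks in advance.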

Multimodal Capabilities in Practical Use

Gemini's multimodal foundation shows up in real user flows. Google Photos can now handle contextual queries across image sets. A user might ask, "Show the beach trip with the striped umbrella," and Gemini filters based on object detection, timestamps, and location data. This is more than a metadata search. It fuses language and vision in a lightweight retrieval pipeline that keeps latency relatively low even on mid-tier hardware.
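
The retrieval half of that flow can be sketched once the spoken query has been turned into structured constraints: object labels, a place, and a date range. This sketch assumes that parsing step has already happened and that each photo carries precomputed detector labels. The `Photo` and `Query` types are hypothetical, and Google Photos' actual pipeline is not reproduced here.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Photo:
    photo_id: str
    taken: date
    place: str
    labels: set[str] = field(default_factory=set)  # e.g. output of an object detector


@dataclass
class Query:
    required_labels: set[str]
    place: Optional[str] = None
    start: Optional[date] = None
    end: Optional[date] = None


def search(photos: list[Photo], q: Query) -> list[str]:
    """Return IDs of photos that satisfy every constraint, newest first."""
    hits = [
        p for p in photos
        if q.required_labels <= p.labels  # all requested objects were detected
        and (q.place is None or p.place == q.place)
        and (q.start is None or p.taken >= q.start)
        and (q.end is None or p.taken <= q.end)
    ]
    return [p.photo_id for p in sorted(hits, key=lambda p: p.taken, reverse=True)]
```

A production system would more likely score partial matches than require every label. The strict filter keeps the sketch short.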

In Gmail, reply suggestions now reflect emotional tone and inferred urgency. Gemini analyzes the conversation thread, weighs prior interactions, and adjusts tone accordingly. But output is gated by reliability thresholds: if confidence is low, Gemini stays silent. Google's internal tests found that showing replies at lower confidence thresholds increased user correction rates. In ambiguous cases, where tone could be misread or context is incomplete, silence is the safer choice.
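
The "stay silent when unsure" rule amounts to a threshold on a confidence score. This sketch assumes the model returns candidate replies with scores in [0, 1]. The `Candidate` type is hypothetical, and the 0.75 default is an arbitrary illustrative value, not a number Gmail uses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    text: str
    confidence: float  # assumed to be in [0, 1]


def pick_suggestion(candidates: list[Candidate], threshold: float = 0.75) -> Optional[str]:
    """Return the best reply if it clears the threshold; otherwise None (show nothing)."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: c.confidence)
    return best.text if best.confidence >= threshold else None
```

Returning `None` instead of a weak guess is the design choice described above. Raising the threshold trades coverage for fewer replies that users have to correct.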

App developers have access to similar tools through Gemini APIs. They can register content (text, images, or even audio) and submit queries with schema hints that help Gemini scope the task. Open-ended prompts on raw input tend to fall apart: latency spikes and output quality drops. Structured queries with task-specific prompts do better, with shorter inference times, more consistent answers, and more predictable integration paths.
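
A structured query pairs a narrow instruction with an explicit output schema. The request below is loosely modeled on the Gemini API's structured-output option, which takes a JSON response MIME type plus a schema. The dictionary layout, the `instruction`/`content` keys, and `build_structured_request` are hypothetical, and this is not that API's exact request format.

```python
import json

# A schema hint for one narrow task: extracting event details from a message.
EVENT_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "date": {"type": "string", "description": "ISO 8601 date, e.g. 2025-06-01"},
        "attendees": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["title", "date"],
}


def build_structured_request(content: str) -> dict:
    """Hypothetical request: task-specific instruction, the content, and an output schema."""
    return {
        "instruction": "Extract the event described in the message. Return JSON only.",
        "content": content,
        "response_mime_type": "application/json",
        "response_schema": EVENT_SCHEMA,
    }


if __name__ == "__main__":
    print(json.dumps(build_structured_request("Lunch with Sam on June 1"), indent=2))
```

Compared with "What do you make of this?", the schema tells the model exactly which fields matter. It also gives the app something concrete to validate against.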

Developer Shifts and Ecosystem Implications

Gemini changes the Android development workflow. Android Studio now includes Gemini-backed suggestions, so developers writing prompts or UI code are working with the same family of models that powers user-facing features. This dual exposure gives teams firsthand experience of how the models behave, which can make debugging easier, but it also introduces new skill requirements.

Prompt testing is now part of QA. Developers are encouraged to simulate model calls with variant inputs, then log and evaluate consistency. Output drift between Gemini model versions is tracked in system logs. This helps teams spot regressions early — not in code, but in model behavior.
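
Prompt QA can work like any other regression suite: run the same task over input variants, normalize the outputs, and measure agreement. In this sketch the model call is passed in as a plain function, so the harness can be exercised with a stub. `consistency_report` and the 0.8 agreement target are hypothetical, not an Android Studio feature.

```python
from collections import Counter
from typing import Callable


def normalize(text: str) -> str:
    """Ignore case and whitespace so trivial differences don't count as drift."""
    return " ".join(text.lower().split())


def consistency_report(
    model: Callable[[str], str], variants: list[str], target: float = 0.8
) -> dict:
    """Run every input variant and report how often the most common answer appears."""
    if not variants:
        raise ValueError("need at least one input variant")
    outputs = [normalize(model(v)) for v in variants]
    answer, count = Counter(outputs).most_common(1)[0]
    agreement = count / len(outputs)
    return {"majority": answer, "agreement": agreement, "passed": agreement >= target}
```

Storing each report alongside the model version in use makes it easier to tell when an update, rather than a code change, moved the numbers.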

Inference cost is another factor. Cloud queries are subsidized, but not unlimited. Apps that send large context windows or make frequent requests may hit usage ceilings. Google suggests batching inputs or compressing verbose data. Compact formats can carry the same meaning in fewer tokens, but prompt length still affects both performance and response time.
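
Batching can be as simple as packing many small items into as few requests as a per-request budget allows. This greedy sketch assumes a rough four-characters-per-token estimate, whereas real counts come from the model's tokenizer. It also assumes a hypothetical 1,000-token cap.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption); a real tokenizer gives exact counts.
    return max(1, len(text) // 4)


def pack_batches(items: list[str], max_tokens: int = 1000) -> list[list[str]]:
    """Greedily group items so each batch's estimated size stays within max_tokens."""
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for item in items:
        cost = estimate_tokens(item)
        if current and used + cost > max_tokens:
            batches.append(current)  # close the batch before it overflows
            current, used = [], 0
        current.append(item)  # an oversized item still gets a batch of its own
        used += cost
    if current:
        batches.append(current)
    return batches
```

Five 100-token items under a 250-token cap become three requests of two, two, and one items, instead of five separate calls.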

Developers also have to plan for change. Gemini’s system prompts and tuning parameters update frequently. What works well this month might drift next month. Apps that rely heavily on exact output formats need regression buffers and fallback logic. This isn't unique to Android, but for many mobile teams, it's new territory.
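
A regression buffer for format drift can be a parser that accepts the strict format, tolerates common deviations, and otherwise falls back to a safe default instead of crashing. The sketch assumes the app expects a JSON object with a `summary` string. The tolerated deviations, a Markdown code fence or JSON embedded in surrounding prose, are examples of drift rather than a complete list.

```python
import json
import re

# Matches a Markdown code fence (three backticks), optionally tagged "json".
FENCE = re.compile(r"`{3}(?:json)?\s*(.*?)\s*`{3}", re.DOTALL)
FALLBACK = {"summary": "", "degraded": True}


def parse_summary(raw: str) -> dict:
    """Try a strict parse, then tolerant extractions, then return a safe default."""
    candidates = [raw]
    fenced = FENCE.search(raw)
    if fenced:
        candidates.append(fenced.group(1))
    start, end = raw.find("{"), raw.rfind("}")
    if start != -1 and end > start:
        candidates.append(raw[start:end + 1])  # JSON embedded in surrounding prose
    for text in candidates:
        try:
            data = json.loads(text)
        except json.JSONDecodeError:
            continue
        if isinstance(data, dict) and isinstance(data.get("summary"), str):
            return {"summary": data["summary"], "degraded": False}
    return dict(FALLBACK)
```

The `degraded` flag lets the UI fall back gracefully. It also lets the team count how often each tolerance path fires, which is an early signal that a model update has changed the output format.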

Conclusion

Android 16 with Gemini marks a shift in platform design. The OS is no longer just managing apps and hardware. It’s actively shaping context and routing tasks to models. For users, this means interactions that feel faster and more responsive. For developers, it means adapting to systems where outputs aren't always deterministic. The integration is deep, but not rigid. Gemini doesn’t overwrite Android norms. It adds a layer of reasoning where it makes sense and stays silent when it doesn’t. The tools are here. The challenge is building around them without treating the model like magic.