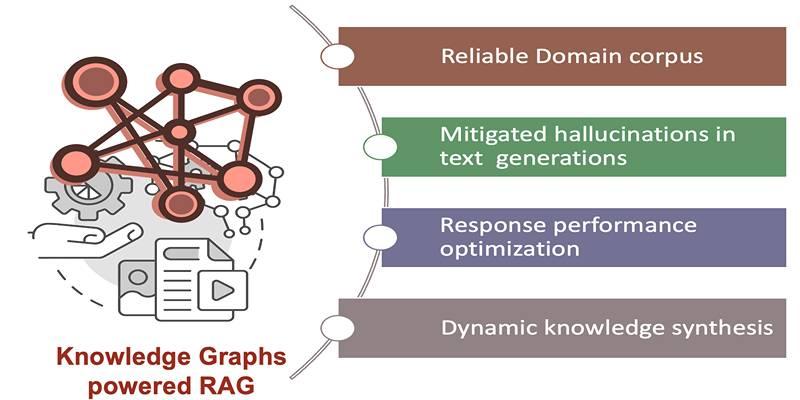

A knowledge graph is a structured map of entities, their attributes, and how they connect. Retrieval-augmented generation (RAG) pairs a retriever that gathers facts with a generator that writes the answer. Generative models can read documents and propose candidate facts as (subject, predicate, object) triples. Analytical models can check those candidates, score them, and decide which should enter the graph. When both work together, you get answers that are grounded, readable, and easier to maintain.

Data Sources And Schema Setup

Pick sources that match the questions your RAG system will face. Policy documents, product manuals, reference pages, and curated tables work well when they are current and clearly written. Before extraction, write a compact schema.

List core entities, name relations, and define attributes with simple types. Keep labels human-friendly so reviewers can work fast. Add a short synonym map so small spelling shifts do not create duplicate nodes. A tidy schema with stable names keeps the graph from drifting over time.
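A compact schema like the one described above can live in a few plain data structures. The sketch below assumes a hypothetical product-support domain: the entity types, relation names (`manufactured_by`, `governed_by`), attributes, and synonym entries are illustrative placeholders, not a prescribed format.

```python
from dataclasses import dataclass

# Hypothetical schema for a product-support graph; swap in your own types and names.
ENTITY_TYPES = {"Product", "Company", "Policy"}

@dataclass(frozen=True)
class Relation:
    name: str          # canonical, human-friendly relation label
    subject_type: str  # entity type allowed on the subject side
    object_type: str   # entity type allowed on the object side

RELATIONS = {
    r.name: r
    for r in (
        Relation("manufactured_by", "Product", "Company"),
        Relation("governed_by", "Product", "Policy"),
    )
}

# Schema self-check: every relation endpoint must be a declared entity type.
assert all(
    r.subject_type in ENTITY_TYPES and r.object_type in ENTITY_TYPES
    for r in RELATIONS.values()
)

# Attributes with simple types, per entity type.
ATTRIBUTES = {"Product": {"name": str, "release_year": int}}

# Synonym map: normalized surface form -> canonical label.
SYNONYMS = {
    "acme": "Acme Corporation",
    "acme corp": "Acme Corporation",
    "acme inc.": "Acme Corporation",
}

def canonical_label(text: str) -> str:
    """Collapse whitespace and case, then look up the synonym map."""
    key = " ".join(text.lower().split())
    return SYNONYMS.get(key, text.strip())

def relation_allowed(name: str, subject_type: str, object_type: str) -> bool:
    """True if the relation exists and its endpoint types match the schema."""
    rel = RELATIONS.get(name)
    return rel is not None and (rel.subject_type, rel.object_type) == (subject_type, object_type)

def attribute_ok(entity_type: str, attr: str, value: object) -> bool:
    """True if the attribute is declared for this type and the value has the declared type."""
    expected = ATTRIBUTES.get(entity_type, {}).get(attr)
    return expected is not None and isinstance(value, expected)
```

With this in place, `canonical_label("  ACME   Corp ")` resolves to `"Acme Corporation"`, so small spelling shifts collapse onto one node instead of creating duplicates.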

Generative Extraction Pipeline

Split content into chunks that respect sentence and heading boundaries. Prompt a generative model to propose (subject, predicate, object) triples, each with a confidence score and an evidence span. Ask for the exact character offsets that back each claim.
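One way to chunk without cutting sentences is to pack whole sentence units greedily while keeping each chunk's character offsets, so evidence spans can later be mapped back to the source. This is a minimal sketch: it assumes a rough regex sentence splitter and a character budget (`max_chars`, a hypothetical parameter) instead of a token count.

```python
import re
from dataclasses import dataclass

@dataclass
class Chunk:
    text: str
    start: int  # character offset of the chunk in the source document
    end: int    # exclusive end offset

# Rough sentence unit: starts at a non-space character and runs to ., ! or ?,
# to the end of the line, or to the end of the text. Headings without terminal
# punctuation therefore end at their newline and are never cut in half.
# Abbreviations and decimals ("e.g.", "3.5") will over-split; a dedicated
# sentence splitter handles those better.
SENTENCE = re.compile(r"[^\s.!?][^\n.!?]*(?:[.!?]+|(?=\n)|$)")

def chunk_document(text: str, max_chars: int = 800) -> list[Chunk]:
    """Greedily pack whole sentences into chunks of at most max_chars characters.

    A single sentence longer than max_chars becomes its own chunk rather than being cut.
    """
    chunks: list[Chunk] = []
    start = end = -1
    for m in SENTENCE.finditer(text):
        if start >= 0 and m.end() - start > max_chars:
            chunks.append(Chunk(text[start:end], start, end))
            start = -1
        if start < 0:
            start = m.start()
        end = m.end()
    if start >= 0:
        chunks.append(Chunk(text[start:end], start, end))
    return chunks
```

Because each `Chunk` keeps `start` and `end`, an offset the model reports inside a chunk converts to a document offset by adding `chunk.start`.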

If the source does not state a fact, the output should say so. That single rule cuts hallucinations and makes review easier. Store all candidates in a staging area instead of writing straight into the live graph. A buffer gives you room to score, filter, and roll back when needed.
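The staging step can also enforce the evidence rule mechanically. The sketch below assumes the model returns a JSON list where each item either carries the triple fields plus offsets, or is `{"status": "not_stated"}`. That output shape is a hypothetical prompt contract, not a standard. A candidate is staged only if the quoted evidence actually appears at the claimed offsets.

```python
import json
from dataclasses import dataclass

@dataclass
class Candidate:
    subject: str
    predicate: str
    object: str
    confidence: float
    start: int     # evidence span offsets into the chunk text
    end: int
    evidence: str  # the exact text the model claims supports the triple

FIELDS = ("subject", "predicate", "object", "confidence", "start", "end", "evidence")

def stage_candidates(raw_json: str, chunk_text: str, staging: list[Candidate]) -> int:
    """Append verified candidates to a staging list (not the live graph); return how many were staged."""
    staged = 0
    for item in json.loads(raw_json):
        if item.get("status") == "not_stated":
            continue  # the model reported that the source does not state this fact
        if any(f not in item for f in FIELDS):
            continue  # malformed output: skip rather than guess
        c = Candidate(**{f: item[f] for f in FIELDS})
        if not (isinstance(c.start, int) and isinstance(c.end, int)):
            continue
        in_bounds = 0 <= c.start < c.end <= len(chunk_text)
        if in_bounds and chunk_text[c.start:c.end] == c.evidence:
            staging.append(c)
            staged += 1
    return staged
```

Rejecting candidates whose evidence does not match the offsets catches many hallucinated spans cheaply, before any model-based scoring runs.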

Analytical Checks And Scoring

Run named entity recognition to check that subjects and objects look like valid entities of the expected types. Use a relation classifier to map predicate phrases to your canonical relation names. Compare strings for token overlap and also check embedding similarity, so paraphrases map to the same relation type.
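The string-overlap plus embedding-similarity idea for relation mapping can be sketched as a weighted blend of two scores. This example covers only the relation-mapping part, not NER. It uses Jaccard token overlap, and it stands in character-trigram vectors for a real embedding model. The canonical descriptions, the 0.5 weight, and the 0.35 threshold are all hypothetical values to tune.

```python
import math
from collections import Counter

# Canonical relation names with short descriptions to match against (hypothetical).
CANONICAL = {
    "manufactured_by": "is manufactured by",
    "governed_by": "is governed by policy",
}

def tokens(text: str) -> set[str]:
    return set(text.lower().replace("_", " ").split())

def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity between two phrases."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0

def embed(text: str) -> Counter:
    """Stand-in embedding: character-trigram counts. Swap in a real sentence-embedding model."""
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))

def cosine(u: Counter, v: Counter) -> float:
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0

def map_relation(phrase: str, threshold: float = 0.35, w_overlap: float = 0.5):
    """Return (canonical_name, score), or (None, score) when nothing clears the threshold."""
    best, best_score = None, 0.0
    for name, description in CANONICAL.items():
        score = (w_overlap * jaccard(phrase, description)
                 + (1 - w_overlap) * cosine(embed(phrase), embed(description)))
        if score > best_score:
            best, best_score = name, score
    return (best, best_score) if best_score >= threshold else (None, best_score)
```

Returning `None` below the threshold sends unmatched predicates to review instead of forcing them into the nearest relation.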

Track source freshness for pages that change often, like pricing or policy notes. Score each candidate with a blend of evidence quality, relation fit, and source trust. Favor precision at the top so the first edges you accept are correct. Expand recall later once the base is clean.
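A blended score with a freshness discount might look like the following. The weights, the 30-day half-life, and the 0.8 acceptance bar are hypothetical. Exponential decay is one assumed way to model freshness, and each input signal is assumed to already sit in the 0..1 range.

```python
import math
from datetime import datetime, timedelta, timezone

# Hypothetical weights; tune them against your labeled review data.
WEIGHTS = {"evidence": 0.4, "relation_fit": 0.35, "source_trust": 0.25}

def freshness(last_checked: datetime, now: datetime, half_life_days: float) -> float:
    """Exponential decay: 1.0 when just checked, 0.5 after one half-life."""
    age_days = max(0.0, (now - last_checked) / timedelta(days=1))
    return math.pow(0.5, age_days / half_life_days)

def candidate_score(evidence, relation_fit, source_trust, last_checked, now, half_life_days=30.0):
    """Blend three 0..1 signals, then discount by source freshness."""
    base = (WEIGHTS["evidence"] * evidence
            + WEIGHTS["relation_fit"] * relation_fit
            + WEIGHTS["source_trust"] * source_trust)
    return base * freshness(last_checked, now, half_life_days)

def accept(scored, threshold=0.8):
    """Precision first: keep (id, score) pairs above a high bar, best first.

    Lower the threshold later to grow recall once the base graph is clean.
    """
    return sorted((c for c in scored if c[1] >= threshold), key=lambda c: c[1], reverse=True)
```

A shorter `half_life_days` suits fast-changing pages such as pricing, while a longer one suits stable reference material.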

Normalization, Merging, And IDs

Before insertion, normalize casing, strip noise, and apply the synonym map. Use vector similarity to catch near duplicates. When the distance between two nodes falls below a small threshold, merge them and record aliases so you do not lose searchability.
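The normalize-then-merge step can be sketched in a few dozen lines. This sketch makes several assumptions. Casing is normalized by comparing on a lowercased key. "Noise" means symbols like quotes and ™. Embeddings arrive as plain float lists from whatever model you use. The 0.05 cosine-distance threshold is a hypothetical starting point.

```python
import math
import re

SYNONYMS = {"acme corp": "Acme Corporation"}  # hypothetical synonym map (lowercase keys)

def normalize(label: str) -> str:
    """Strip noise symbols, collapse whitespace, and apply the synonym map on a lowercased key."""
    cleaned = re.sub(r"[^\w\s&.-]", "", label)  # drops quotes, ™, ® and similar noise
    cleaned = " ".join(cleaned.split())
    return SYNONYMS.get(cleaned.lower(), cleaned)

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def merge_near_duplicates(labels, vectors, max_distance=0.05):
    """labels: node_id -> label; vectors: node_id -> embedding.

    Each node folds into the first kept node within max_distance (cosine distance).
    The kept node keeps its ID; the merged node's label is recorded as an alias.
    O(n^2): fine for a sketch, use an approximate-nearest-neighbor index at scale.
    """
    kept: dict[str, dict] = {}
    for node_id in sorted(labels):
        target = next(
            (k for k in kept if 1 - cosine(vectors[node_id], vectors[k]) <= max_distance),
            None,
        )
        if target is None:
            kept[node_id] = {"label": labels[node_id], "aliases": set()}
        else:
            kept[target]["aliases"].add(labels[node_id])
    return kept
```

Keeping the surviving node's ID and storing the merged label as an alias preserves both stable IDs and searchability.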

Keep stable IDs that never change, even when labels do. For relationships, store the direction, the typed predicate, and full provenance: the source link or document ID, the timestamp, the model version, the prompt hash, and the evidence offsets. Provenance makes audits fast and prevents long arguments during review.
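A provenance-carrying edge record could look like this minimal sketch. It makes three assumptions: random UUIDs serve as stable node IDs, SHA-256 of the prompt template is the prompt hash, and timestamps are ISO 8601 in UTC. The field names and the model version string are hypothetical.

```python
import hashlib
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone

def new_node_id() -> str:
    """Random ID that never depends on the label, so renaming a node does not change it."""
    return uuid.uuid4().hex

def prompt_hash(prompt_template: str) -> str:
    return hashlib.sha256(prompt_template.encode("utf-8")).hexdigest()

@dataclass(frozen=True)
class Edge:
    subject_id: str      # stable node IDs, never labels
    predicate: str       # typed, canonical relation name
    object_id: str       # direction is always subject -> object
    source: str          # source URL or document ID
    extracted_at: str    # ISO 8601 UTC timestamp
    model_version: str
    prompt_hash: str
    evidence_start: int  # evidence offsets in the source
    evidence_end: int

def make_edge(subject_id, predicate, object_id, source, model_version, prompt_template, start, end):
    return Edge(
        subject_id=subject_id,
        predicate=predicate,
        object_id=object_id,
        source=source,
        extracted_at=datetime.now(timezone.utc).isoformat(),
        model_version=model_version,
        prompt_hash=prompt_hash(prompt_template),
        evidence_start=start,
        evidence_end=end,
    )
```

Freezing the dataclass keeps provenance immutable once written. A correction becomes a new edge with its own provenance rather than a silent edit.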

Wiring The Graph Into RAG

The graph improves both retrieval and generation. For retrieval, detect entities in the user query, then walk short paths in the graph to find related nodes and documents. Blend those graph results with vector search over passages.
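The retrieval flow above (detect entities, walk short paths, blend with vector search) can be sketched as follows. The article does not name a blending method. This sketch swaps in reciprocal rank fusion (RRF), one common choice. Alias-substring entity detection and the `node_docs` mapping are simplifying assumptions.

```python
from collections import deque

def detect_entities(query: str, aliases: dict[str, str]) -> set[str]:
    """Naive entity detection: any known alias appearing in the lowercased query."""
    q = query.lower()
    return {node for alias, node in aliases.items() if alias in q}

def walk(graph: dict[str, list[str]], seeds: set[str], max_hops: int = 2) -> dict[str, int]:
    """Breadth-first walk from the seed nodes; returns node -> hop distance."""
    dist = {s: 0 for s in seeds}
    queue = deque(seeds)
    while queue:
        node = queue.popleft()
        if dist[node] >= max_hops:
            continue
        for nxt in graph.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist

def graph_ranked_docs(dist: dict[str, int], node_docs: dict[str, list[str]]) -> list[str]:
    """Documents attached to reached nodes, closest nodes first, without duplicates."""
    ranked: list[str] = []
    for node in sorted(dist, key=lambda n: (dist[n], n)):
        for doc in node_docs.get(node, []):
            if doc not in ranked:
                ranked.append(doc)
    return ranked

def rrf(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Reciprocal rank fusion: each list adds 1 / (k + rank) to a document's score."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] = scores.get(doc, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)
```

A document found by both the graph walk and vector search collects two RRF contributions, so agreement between the two retrievers pushes it to the top.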

For generation, pass a tight context: the top passages, a small list of high-confidence triples, and citations. Ask the model to cite every claim that comes from the graph or the passages. If the answer contains a claim without support in the context, flag it for a post-answer check. Those flags feed back into your review queue.
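A simple version of the context builder and the post-answer flag could number every citable item and then check the answer's `[n]` markers. Several details here are assumptions: the `[n]` citation format, the limits on passages and triples, the 0.8 confidence cut, and the regex sentence split. The check also only confirms that a claim carries a valid citation. It does not verify that the cited item entails the claim.

```python
import re

def build_context(passages, triples, max_passages=3, max_triples=5, min_conf=0.8):
    """Number each passage and high-confidence triple so the model can cite them as [n].

    passages: ranked list of (source_id, text).
    triples: list of (subject, predicate, object, confidence, source_id).
    Returns the context string and the number of citable items.
    """
    lines = []
    for source_id, text in passages[:max_passages]:
        lines.append(f"[{len(lines) + 1}] ({source_id}) {text}")
    strong = [t for t in triples if t[3] >= min_conf][:max_triples]
    for subj, pred, obj, _conf, source_id in strong:
        lines.append(f"[{len(lines) + 1}] ({source_id}) {subj} {pred} {obj}")
    return "\n".join(lines), len(lines)

CITATION = re.compile(r"\[(\d+)\]")

def flag_unsupported(answer: str, n_items: int) -> list[str]:
    """Return sentences with no citation, or with a citation number outside the context."""
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        cited = [int(x) for x in CITATION.findall(sentence)]
        if not cited or any(not 1 <= c <= n_items for c in cited):
            flagged.append(sentence)
    return flagged
```

Flagged sentences can go straight into the review queue along with the context that produced them.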

Quality Testing And Human Review

Build a small, sharp test set of questions with known answers and a few distractors. Score answer correctness, citation coverage, and refusal behavior when the graph lacks a fact. Run a blind human review weekly.
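The three test-set measures can be computed from a handful of counts. This sketch uses a crude substring match for correctness. It assumes claim and citation counts come from an upstream step, such as the citation check used at answer time. A case with `expected=None` stands for a question the graph cannot answer, where refusing is the correct behavior.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Case:
    question: str
    expected: Optional[str]  # None: the graph lacks this fact, so refusing is correct

@dataclass
class Result:
    answer: str
    refused: bool
    cited_claims: int  # claims in the answer that carry a citation
    total_claims: int

def score_run(cases: list[Case], results: list[Result]) -> dict[str, float]:
    pairs = list(zip(cases, results))
    answerable = [(c, r) for c, r in pairs if c.expected is not None]
    missing = [(c, r) for c, r in pairs if c.expected is None]
    # Crude correctness check: the expected answer string appears in a non-refusal.
    correct = sum(
        1 for c, r in answerable if not r.refused and c.expected.lower() in r.answer.lower()
    )
    claims = sum(r.total_claims for r in results)
    cited = sum(r.cited_claims for r in results)
    return {
        "accuracy": correct / len(answerable) if answerable else 0.0,
        "citation_coverage": cited / claims if claims else 0.0,
        "refusal_rate_when_missing": sum(r.refused for _, r in missing) / len(missing) if missing else 0.0,
    }
```

Substring matching is deliberately simple. Swap in exact-match rules or human grading for answers with more than one valid phrasing.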

Reviewers should see the question, the answer, the cited passages, and the supporting triples. Ask them to mark wrong links, stale values, and sketchy merges. Track agreement between reviewers. When they often disagree, your schema or labeling rules likely need clearer guidance.
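The article does not prescribe an agreement measure. Cohen's kappa is one common choice for two reviewers, because it corrects raw agreement for the agreement expected by chance. This sketch assumes both reviewers labeled the same items in the same order, using categorical labels such as `"ok"` and `"wrong"`.

```python
from collections import Counter

def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Chance-corrected agreement between two reviewers' labels on the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equally long, non-empty label lists")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    ca, cb = Counter(a), Counter(b)
    # Probability both reviewers pick the same label if each labeled at random
    # with their own observed label frequencies.
    expected = sum(ca[k] * cb[k] for k in ca.keys() | cb.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used one identical label for everything
    return (observed - expected) / (1 - expected)
```

Values near 0 mean agreement is no better than chance. A persistently low kappa on one relation type is a good signal that its labeling rule needs rewriting.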

Ranking Signals For Better Retrieval

Even a good graph needs strong ranking. Train a light ranker that rewards what actually helps answer the question, not vanity metrics. Mix entity match strength, relation importance, path length, and evidence quality. Reward passages that contain the exact evidence spans used during extraction.
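A "light ranker" can be as small as a logistic regression over the listed signals. This sketch assumes pointwise labels, where 1 means the item helped answer and 0 means it did not. It trains with plain stochastic gradient descent. The feature keys, the `1 / (1 + path_length)` transform, and the learning settings are all hypothetical.

```python
import math

def featurize(item: dict) -> list[float]:
    return [
        item["entity_match"],                    # 0..1: how strongly query entities matched
        item["relation_importance"],             # 0..1: weight of the relation type on the path
        1.0 / (1 + item["path_length"]),         # shorter graph paths score higher
        item["evidence_quality"],                # 0..1: from the extraction scoring step
        1.0 if item["has_exact_span"] else 0.0,  # passage contains an extraction evidence span
    ]

def score(item: dict, w: list[float], b: float) -> float:
    return sum(wi * xi for wi, xi in zip(w, featurize(item))) + b

def train(items: list[dict], labels: list[int], epochs: int = 200, lr: float = 0.5):
    """Pointwise logistic regression with plain SGD; label 1 = the item helped answer."""
    w, b = [0.0] * 5, 0.0
    for _ in range(epochs):
        for item, y in zip(items, labels):
            p = 1.0 / (1.0 + math.exp(-score(item, w, b)))
            grad = p - y  # derivative of log loss with respect to the logit
            w = [wi - lr * grad * xi for wi, xi in zip(w, featurize(item))]
            b -= lr * grad
    return w, b

def rank(items: list[dict], w: list[float], b: float) -> list[dict]:
    return sorted(items, key=lambda it: score(it, w, b), reverse=True)
```

A linear model keeps the learned weights readable, so you can see at a glance whether exact evidence spans or short paths are carrying the ranking.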

Discount stale sources for time-sensitive queries. Track NDCG@3 and NDCG@5, because users rarely look beyond the first few results. Watch mean reciprocal rank (MRR) to see how early the first helpful item appears. Small, focused metrics help you tune without turning analysis into a maze.
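Both metrics take only a few lines to compute. This sketch uses linear gain. Some setups use `2**rel - 1` instead. It also computes the ideal ordering from the retrieved list's own relevance labels, which is a common simplification when you have no full judgment pool.

```python
import math

def dcg(rels: list[float], k: int) -> float:
    """Discounted cumulative gain over the top k positions (position 1 has discount log2(2) = 1)."""
    return sum(rel / math.log2(i + 2) for i, rel in enumerate(rels[:k]))

def ndcg_at_k(rels: list[float], k: int) -> float:
    ideal = dcg(sorted(rels, reverse=True), k)
    return dcg(rels, k) / ideal if ideal > 0 else 0.0

def mrr(runs: list[list[float]]) -> float:
    """Mean over queries of 1 / rank of the first relevant item (0 if none is relevant)."""
    total = 0.0
    for rels in runs:
        for i, rel in enumerate(rels, start=1):
            if rel > 0:
                total += 1.0 / i
                break
    return total / len(runs) if runs else 0.0
```

For example, a ranking with relevance `[0, 0, 1]` gets NDCG@3 of 0.5, because the only useful item sits in third place.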

Freshness, Drift, And Guardrails

Schedule rechecks by source type. Some pages change monthly, others shift daily. When new extractions conflict with stable values from trusted sources, hold them in the buffer for review instead of flipping the graph back and forth.
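Per-type recheck schedules and the "hold conflicts for review" rule can be sketched with two small functions. The intervals and the `(value, came_from_trusted_source)` storage layout are hypothetical. In a real system, the graph and review queue would be database tables rather than a dict and a list.

```python
from datetime import datetime, timedelta

# Hypothetical recheck intervals per source type.
RECHECK_EVERY = {
    "pricing": timedelta(days=1),
    "policy": timedelta(days=7),
    "manual": timedelta(days=30),
}

def due_for_recheck(source_type: str, last_checked: datetime, now: datetime) -> bool:
    return now - last_checked >= RECHECK_EVERY.get(source_type, timedelta(days=30))

def apply_update(graph: dict, review_queue: list, key, new_value, from_trusted: bool) -> bool:
    """graph maps key -> (value, came_from_trusted_source).

    A new value that conflicts with a value from a trusted source is held for review
    instead of overwriting it, so the graph does not flip back and forth.
    Returns True if the graph was updated.
    """
    current = graph.get(key)
    if current is not None and current[0] != new_value and current[1]:
        review_queue.append({"key": key, "current": current[0], "proposed": new_value})
        return False
    graph[key] = (new_value, from_trusted)
    return True
```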

Add drift alerts for sharp moves in embedding clusters or relation distributions. Spikes can be real, yet they always deserve a look. Keep a small allowlist of high trust sources that can update specific fields with lighter checks. All other sources should pass the full review path.
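For the relation-distribution half of drift alerting, one simple option is to compare the share of each relation type between two time windows. This sketch uses total variation distance for that comparison, which is our choice here and not something the article specifies. It does not cover embedding-cluster drift. The 0.2 threshold and the allowlist entries are hypothetical.

```python
from collections import Counter

def distribution(labels: list[str]) -> dict[str, float]:
    counts = Counter(labels)
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()} if total else {}

def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Half the L1 distance between two distributions: 0 = identical, 1 = disjoint."""
    keys = p.keys() | q.keys()
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

def relation_drift_alert(last_window: list[str], this_window: list[str], threshold: float = 0.2):
    """Compare relation types of newly accepted edges across two windows."""
    tv = total_variation(distribution(last_window), distribution(this_window))
    return tv > threshold, tv

# Hypothetical allowlist of (source, field) pairs that may update with lighter checks.
ALLOWLIST = {("vendor_price_feed", "price")}

def review_path(source: str, field: str) -> str:
    return "light" if (source, field) in ALLOWLIST else "full"
```

Scoping the allowlist to specific fields means a trusted price feed can update prices quickly without gaining the power to rewrite unrelated attributes.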

Cost, Latency, And Practical Choices

Chunk size shapes both quality and spend. Larger chunks reduce calls but blur evidence. Smaller chunks give precise spans but cost more. Pick a middle ground and tune with data. Cache extraction results keyed by a content hash so unchanged pages do not trigger new runs.
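A content-hash cache needs little more than a dictionary keyed by SHA-256. This sketch makes a design choice that goes beyond the text: the key also includes the model version and prompt hash, so upgrading either one triggers fresh extraction. The in-memory dict stands in for whatever persistent store you use, and `extract_fn` is a placeholder for your extraction call.

```python
import hashlib
from typing import Callable

class ExtractionCache:
    """Reuse extraction results for content that has not changed.

    The key covers the chunk text plus the model version and prompt hash, so upgrading
    either one triggers fresh extraction while unchanged pages are skipped.
    """

    def __init__(self, extract_fn: Callable[[str], list]):
        self._extract = extract_fn
        self._store: dict[str, list] = {}
        self.misses = 0

    def get(self, text: str, model_version: str, prompt_hash: str) -> list:
        key = hashlib.sha256(
            "\x00".join((model_version, prompt_hash, text)).encode("utf-8")
        ).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._extract(text)
        return self._store[key]
```

The `misses` counter doubles as a cheap spend metric. A sudden jump usually means many pages changed, or the cache key changed.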

Batch requests where your platform allows it. Measure time from question to answer as a single number. Users feel total delay, not where it came from. If latency grows, trim context windows, tighten graph walks, and cache frequent subgraphs.
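Measuring total delay and caching frequent subgraphs might look like the sketch below. It makes three assumptions: an in-process timer around the whole answer path, a nearest-rank p95, and `functools.lru_cache` over a hypothetical in-memory adjacency list (`GRAPH`). After graph updates, clear the cache so stale subgraphs are not served.

```python
import math
import time
from functools import lru_cache

latencies_ms: list[float] = []

def timed_answer(answer_fn, question: str):
    """Record one end-to-end number per question: retrieval, graph walk, and generation together."""
    start = time.perf_counter()
    result = answer_fn(question)
    latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return result

def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not values:
        raise ValueError("no latency samples")
    ordered = sorted(values)
    idx = max(0, math.ceil(pct / 100 * len(ordered)) - 1)
    return ordered[idx]

# Hypothetical adjacency list for illustration.
GRAPH = {"widget_x": ("acme",), "acme": ("policy_7",)}

@lru_cache(maxsize=1024)
def cached_subgraph(entity_id: str, max_hops: int = 2) -> frozenset:
    """Nodes within max_hops of entity_id; call cached_subgraph.cache_clear() after graph updates."""
    seen = {entity_id}
    frontier = {entity_id}
    for _ in range(max_hops):
        frontier = {n for node in frontier for n in GRAPH.get(node, ()) if n not in seen}
        seen |= frontier
    return frozenset(seen)
```

Tracking a high percentile rather than the mean keeps the occasional slow answer visible, and slow answers are what users remember.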

Monitoring That Tells A Clear Story

Dashboards should speak plain language and link to examples. Track node and edge counts, merge rates, candidate acceptance rates, and the share of edges with strong provenance. Add weekly answer accuracy, citation coverage, and the rate of safe refusals.

When a metric dips, include clickable cases so someone can jump from the number to the evidence. A short weekly note that pairs numbers with two or three real examples helps teams act faster than a page full of unlabeled charts.

Conclusion

Generative models read and propose. Analytical models test and decide. A clean schema, careful merging, and full provenance keep the graph steady. Hybrid retrieval blends graph paths with vector search so the right context reaches the generator. Human review, focused metrics, and readable dashboards close the loop. With these habits in place, a RAG system can produce answers that stay grounded, cite their sources, and hold up as content changes.