The push for autonomous AI agents in dynamic real-world environments has revealed a fundamental weakness in traditional Large Language Model (LLM) architectures. Agents relying on simple "think-then-act" methods, like ReAct, often fail when facing partially observable, rapidly changing scenarios requiring extensive memory. This vulnerability causes critical issues such as model drift, where performance degrades as the agent fails to adapt, and catastrophic planning failures when tasks deviate from initial steps.

A new paradigm is emerging to address these limitations. It shifts focus from maximizing the LLM's single-turn reasoning to constructing a persistent, self-correcting internal world model. This novel cognitive architecture promises agents capable of navigating messy, unpredictable reality with significantly improved efficiency and reliability.

Moving Beyond Simple Prompt Chaining

The common approach to agent design, which we can call the shallow reasoning loop, places the LLM at the center of every single decision. An agent receives a goal, generates an action plan using an intricate prompt chain, executes the first step, observes the result, and then feeds the entire history back to the model for the next decision. This process is inherently wasteful.

For a complex task involving querying a CRM, drafting an email, calling an external API, and updating a database, every single step forces the model to re-read an ever-growing history. If each step adds a roughly constant number of tokens, the total number of tokens the model reads over the whole task grows roughly quadratically with the number of steps. The long context window required for continuous state updates inflates inference cost and introduces significant latency.
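The cost growth described above can be made concrete with a small back-of-the-envelope calculation. This is a sketch under assumed numbers: the function names and the token counts (a 300-token prompt, 500 tokens added per step, a 200-token state summary) are illustrative and do not come from any measurement.

```python
def replay_cost(step_tokens: list[int], prompt_tokens: int = 0) -> int:
    """Total input tokens read when the full history is re-sent before every step."""
    total = 0
    history = prompt_tokens
    for tokens in step_tokens:
        total += history   # the model re-reads everything accumulated so far
        history += tokens  # this step's action and observation join the history
    return total


def compact_cost(steps: int, prompt_tokens: int, state_tokens: int) -> int:
    """Total input tokens read when each step sees only the prompt plus a fixed-size state."""
    return steps * (prompt_tokens + state_tokens)


# Illustrative numbers only (assumptions, not measurements).
four_step_replay = replay_cost([500] * 4, prompt_tokens=300)     # 4200
twenty_step_replay = replay_cost([500] * 20, prompt_tokens=300)  # 101000
twenty_step_compact = compact_cost(20, prompt_tokens=300, state_tokens=200)  # 10000
```

Under these assumptions, going from 4 to 20 steps multiplies the replayed input by roughly 24, while the fixed-size alternative grows only linearly.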

More critically, this approach suffers from brittleness. If the external API call fails or returns an unexpected schema, the agent's reasoning often stalls or enters an unrecoverable loop because the core LLM is poor at genuine self-diagnosis and large-scale plan repair outside of fixed, prompt-engineered instructions.

The alternative approach fundamentally re-architects the internal system. Instead of serving as the sole reasoning engine, the LLM becomes a knowledge distiller and meta-planner that manages a structured, external state.

The Role of Externalized Cognitive Architecture

The new method, often referred to as a Cognitive Architecture or Model-Based Reflex Agent with Memory, separates the core functions of the agent from the raw LLM calls. This architecture is defined by three key external components: an Episodic Memory, a Procedural Library, and a Reflex/Heuristic Engine. The LLM's role is narrowed to two main tasks: translating the high-level user goal into a discrete plan, and synthesizing the stored information from the external components when a deep-reasoning step or self-correction is needed.

The Episodic Memory, typically implemented with a vector database, stores past observations, decisions, and outcomes—not just raw conversational history, but structured summaries of what happened, why it happened, and the resulting change in the environment. This memory allows the agent to recall similar past situations efficiently, addressing the context retention challenge that plagues simple LLM agents when dealing with long-running tasks.
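A minimal sketch of such a memory follows, assuming a toy bag-of-words "embedding" and an in-process list in place of a real embedding model and vector database. The names `Episode`, `EpisodicMemory`, `embed`, and `cosine` are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Episode:
    observation: str  # what happened
    decision: str     # what the agent did and why
    outcome: str      # the resulting change in the environment

    def text(self) -> str:
        return f"{self.observation} {self.decision} {self.outcome}"


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class EpisodicMemory:
    def __init__(self) -> None:
        self._items: list[tuple[Counter, Episode]] = []

    def store(self, episode: Episode) -> None:
        self._items.append((embed(episode.text()), episode))

    def recall(self, query: str, k: int = 1) -> list[Episode]:
        # Rank stored episodes by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [episode for _, episode in ranked[:k]]


memory = EpisodicMemory()
memory.store(Episode("inventory api returned timeout", "retried with backoff", "second call succeeded"))
memory.store(Episode("crm lookup returned no contact", "asked user for email", "contact created"))
best = memory.recall("inventory api timeout", k=1)[0]
```

Here `best` is the timeout episode, because it is the only stored summary that shares words with the query. A production system would swap in learned embeddings and an approximate nearest-neighbor index, but the store-then-recall shape stays the same.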

The Procedural Library contains verifiable, deterministic code functions (e.g., Python scripts, SQL queries, API wrappers) that handle non-generative tasks. When the agent needs to check inventory, it executes a pre-vetted function rather than asking the LLM to generate the API call string, which is prone to format-related hallucinations and security vulnerabilities. This dramatically increases reliability and reduces non-productive token use.
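One way to picture this is a small registry of vetted functions that the LLM can only refer to by name. The sketch below is an assumption about how such a library might look, not a prescribed design; `ProceduralLibrary`, `check_inventory`, and the `INVENTORY` table are hypothetical.

```python
from typing import Callable


class ProceduralLibrary:
    """Registry of pre-vetted, deterministic tools addressed by name."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., object]] = {}

    def register(self, fn: Callable[..., object]) -> Callable[..., object]:
        self._tools[fn.__name__] = fn
        return fn

    def call(self, name: str, **kwargs: object) -> object:
        # Only registered tools can run; anything else is rejected outright.
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**kwargs)


library = ProceduralLibrary()

# Hypothetical stand-in for a real inventory backend.
INVENTORY = {"sku-123": 7}


@library.register
def check_inventory(sku: str) -> int:
    # Validate the argument before touching the backend.
    if not isinstance(sku, str) or not sku.startswith("sku-"):
        raise ValueError(f"malformed SKU: {sku!r}")
    return INVENTORY.get(sku, 0)


# The LLM emits only a structured request; the library does the actual work.
request = {"tool": "check_inventory", "args": {"sku": "sku-123"}}
stock = library.call(request["tool"], **request["args"])
```

Because the model chooses from a fixed set of names and arguments rather than composing a raw API string, a malformed or invented call fails fast with a clear error instead of reaching the external system.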

Leveraging Real-Time Contextual Grounding

A major advantage of the Cognitive Architecture is its capacity for real-time contextual grounding, mitigating both hallucination and the token burn problem. In a traditional agent, the relevant documents are retrieved using Retrieval-Augmented Generation (RAG) and loaded, often in large part, into the LLM's context window. This can easily involve thousands of tokens for a complex query.

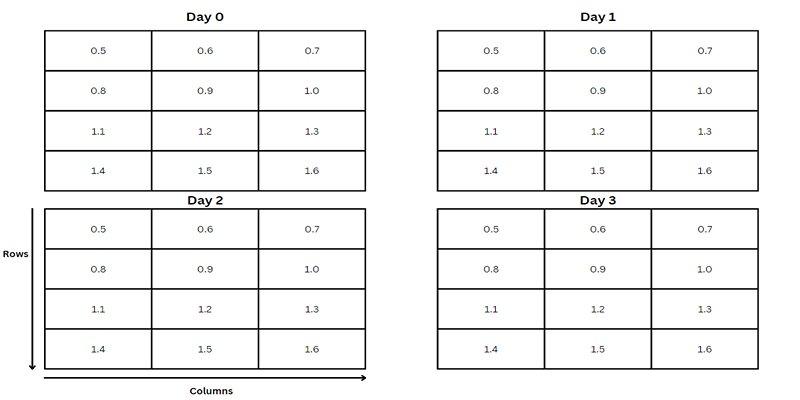

The new approach shifts this burden. The Reflex/Heuristic Engine maintains a simplified, continuously updated world state—a set of key-value pairs representing the most salient, up-to-the-minute facts. For instance, in an air traffic control simulation agent, the world state would contain the altitude and heading of every plane in the agent’s sector, updated from sensor inputs every second. Simple, critical decisions, like a conflict alert, are handled by low-latency, deterministic rules embedded in the Reflex Engine, bypassing the LLM completely.
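The air traffic example can be sketched as a key-value world state plus one deterministic rule. This is a deliberately simplified illustration: the rule looks only at altitude, the 1000 ft threshold is an assumed placeholder rather than an operational standard, and `ReflexEngine` and `Plane` are hypothetical names.

```python
from dataclasses import dataclass
from itertools import combinations

# Illustrative threshold only; not an operational separation standard.
MIN_VERTICAL_SEPARATION_FT = 1000


@dataclass
class Plane:
    altitude_ft: float
    heading_deg: float


class ReflexEngine:
    def __init__(self) -> None:
        # World state: callsign -> latest known altitude and heading.
        self.world_state: dict[str, Plane] = {}

    def update(self, callsign: str, altitude_ft: float, heading_deg: float) -> None:
        # Overwrite with the newest sensor reading; no history is kept here.
        self.world_state[callsign] = Plane(altitude_ft, heading_deg)

    def conflict_alerts(self) -> list[tuple[str, str]]:
        # Deterministic rule: flag any pair closer than the vertical threshold.
        alerts = []
        for a, b in combinations(sorted(self.world_state), 2):
            gap = abs(self.world_state[a].altitude_ft - self.world_state[b].altitude_ft)
            if gap < MIN_VERTICAL_SEPARATION_FT:
                alerts.append((a, b))
        return alerts


engine = ReflexEngine()
engine.update("AB1", altitude_ft=30000, heading_deg=90)
engine.update("CD2", altitude_ft=30500, heading_deg=270)
engine.update("EF3", altitude_ft=35000, heading_deg=180)
alerts = engine.conflict_alerts()
```

With these readings, `alerts` contains only the pair `("AB1", "CD2")`. No model call is involved at any point, which is what keeps this path fast and predictable.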

The LLM is only invoked when a new, non-routine event occurs, requiring the agent to synthesize a complex new strategy. Even then, the system passes the model only the current, high-priority world state and a concise summary from the Episodic Memory, keeping the overall token count low. This hierarchical decision-making is key to achieving a sub-second latency for critical actions while reserving the high-cost, high-latency LLM for genuine planning and reasoning.
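The hierarchy can be sketched as a two-tier dispatcher: deterministic rules get the first chance at every event, and the model is called only when none of them fires. Everything below is an assumed illustration; `decide`, the stub functions, and the event fields are hypothetical, and `stub_llm` stands in for a real model call.

```python
from typing import Callable, Optional

Rule = Callable[[dict, dict], Optional[str]]


def decide(event: dict, world_state: dict, rules: list[Rule],
           recall: Callable[[str], str], llm: Callable[[str], str]) -> tuple[str, str]:
    # Fast path: the first rule that returns an action wins, with no model call.
    for rule in rules:
        action = rule(event, world_state)
        if action is not None:
            return "reflex", action
    # Slow path: send only the current state and one concise memory summary.
    prompt = "\n".join([
        f"Event: {event['description']}",
        f"World state: {world_state}",
        f"Similar past episode: {recall(event['description'])}",
    ])
    return "llm", llm(prompt)


# Hypothetical stubs so the sketch runs without any external service.
llm_calls: list[str] = []


def stub_llm(prompt: str) -> str:
    llm_calls.append(prompt)
    return "reroute around closed sector"


def stub_recall(query: str) -> str:
    return "sector closure last week: rerouted traffic north"


def conflict_rule(event: dict, state: dict) -> Optional[str]:
    return "issue conflict alert" if event["type"] == "conflict" else None


state = {"AB1.altitude_ft": 30000, "CD2.altitude_ft": 30500}
routine = decide({"type": "conflict", "description": "AB1 and CD2 converging"},
                 state, [conflict_rule], stub_recall, stub_llm)
novel = decide({"type": "sector_closed", "description": "sector 4 closed"},
               state, [conflict_rule], stub_recall, stub_llm)
```

The routine event is resolved on the reflex path and never reaches the model; only the novel event triggers a call, and its prompt contains the compact state and a single recalled summary rather than the full interaction history.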

Externalizing most of the state and deterministic logic also opens up a fine-tuning trade-off: routine decisions that still need a model can be handed to smaller, domain-specific models attached to the Reflex Engine instead of a large general-purpose LLM. This substantially cuts LLM inference costs and makes these agents practical for high-volume operational tasks.

From Research to Deployment: Practical Considerations

Implementing this model-based agent requires expertise in managing vector database infrastructure for memory and designing a clean, low-coupling interface between the LLM and the Procedural Library tools. A crucial practical step is establishing a robust observability and debugging framework. Unlike a sequential chain of LLM calls, where an error can be traced along a single path, debugging an agent with a cognitive architecture means tracing non-deterministic LLM-driven actions alongside deterministic code execution and asynchronous memory updates.
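One possible starting point, sketched here under the assumption that a single shared trace ID per task is enough to correlate the three kinds of activity, is a tracer that records every LLM call, tool execution, and memory update as a structured event. `Tracer` and `TraceEvent` are hypothetical names, and a real deployment would more likely export these events to an existing tracing backend.

```python
import time
import uuid
from dataclasses import dataclass, field

KINDS = {"llm", "tool", "memory"}


@dataclass
class TraceEvent:
    trace_id: str
    kind: str    # one of KINDS
    name: str    # e.g. the tool name or the memory operation
    detail: dict
    timestamp: float = field(default_factory=time.time)


class Tracer:
    def __init__(self) -> None:
        # One ID per task so events from all components can be joined later.
        self.trace_id = uuid.uuid4().hex
        self.events: list[TraceEvent] = []

    def record(self, kind: str, name: str, **detail: object) -> TraceEvent:
        if kind not in KINDS:
            raise ValueError(f"unknown event kind: {kind}")
        event = TraceEvent(self.trace_id, kind, name, detail)
        self.events.append(event)
        return event

    def by_kind(self, kind: str) -> list[TraceEvent]:
        return [e for e in self.events if e.kind == kind]


tracer = Tracer()
tracer.record("llm", "plan", prompt_tokens=412)
tracer.record("tool", "check_inventory", sku="sku-123", result=7)
tracer.record("memory", "store", summary="inventory checked")
tool_events = tracer.by_kind("tool")
```

Keeping the event kind explicit makes it possible to separate failures that originate in non-deterministic model output from failures in deterministic code or in delayed memory writes.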

Deployment also introduces complexity around data bias and drift in the memory system. As the agent interacts with reality, its Episodic Memory is continuously updated. If the data it encounters is biased, the heuristics the agent learns from that memory will inherit the bias. Regular human-in-the-loop review of the memory traces and a scheduled memory consolidation process are necessary maintenance tasks. Ultimately, this externalized architecture offers a pathway to scaling autonomous agents beyond brittle demos into robust, cost-effective enterprise systems.

Conclusion

The transition to an externalized cognitive architecture represents a pragmatic evolution, leveraging the LLM's strengths in creative reasoning while addressing its weaknesses in memory, cost, and deterministic execution. By offloading state management to external components—Episodic Memory, a Procedural Library, and a Reflex Engine—agents achieve greater stability and cost efficiency. This approach enables hierarchical decision-making, reserving the powerful LLM for novel planning. Consequently, these agents exhibit less hallucination, are more robust to environmental change, and are viable for high-volume enterprise deployment where predictable reliability is essential.