In many organizations, the push to adopt AI starts with a focus on efficiency—automating tasks, reducing overhead, optimizing workflows. But one of the more transformative applications is subtler: using AI to understand and grow human capabilities at scale. Becoming a skills-focused organization means moving away from static roles and formal credentials toward a more dynamic view of what people can do, where they’re growing, and how their abilities align with business needs. This shift depends on more than technology. It requires treating skills as living data, integrating AI into core systems, and building feedback loops between humans and machines.

Infer Skills from Actual Work, Not Just Labels

Job titles, resumes, and learning completions provide limited insight into real ability. A software engineer may be called a "full-stack developer," but what they actually do daily might lean heavily toward frontend work. Course completions indicate exposure, not proficiency. AI can help move beyond labels by analyzing how people engage with real work.

This starts with sourcing data from systems of record: code repositories, ticketing tools, design platforms, product specs, and documentation. Natural language processing and embeddings can analyze this data to detect patterns and extract skill signals. For instance, if someone has contributed consistently to Kubernetes configurations over several quarters, that suggests real fluency—even if it's not listed on their profile.

Not all data is equally useful. Signal quality varies based on how structured the data is and how closely it's tied to output. Slack messages are noisy. Pull requests, design proposals, or QA test plans offer better structure for analysis. Context is also crucial. A junior contributor's comments in review threads shouldn’t be treated the same as authored production code.
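One way to act on this is to weight each piece of evidence by where it came from and what role the person played. The sketch below is illustrative only: the source types, roles, and weight values are assumptions chosen for the example, not recommended settings.

```python
from dataclasses import dataclass

# Hypothetical weights: structured, output-linked artifacts count more than chat.
SOURCE_WEIGHT = {
    "pull_request": 1.0,
    "design_proposal": 0.9,
    "qa_test_plan": 0.8,
    "chat_message": 0.2,
}
# Hypothetical weights: authoring counts more than commenting on someone else's work.
ROLE_WEIGHT = {"author": 1.0, "reviewer": 0.5, "commenter": 0.25}


@dataclass
class Signal:
    skill: str
    source: str
    role: str


def score_skills(signals):
    """Sum weighted evidence per skill; unknown sources or roles get a low default."""
    scores = {}
    for s in signals:
        weight = SOURCE_WEIGHT.get(s.source, 0.1) * ROLE_WEIGHT.get(s.role, 0.1)
        scores[s.skill] = scores.get(s.skill, 0.0) + weight
    return scores


signals = [
    Signal("kubernetes", "pull_request", "author"),
    Signal("kubernetes", "pull_request", "author"),
    Signal("kubernetes", "chat_message", "commenter"),
    Signal("react", "pull_request", "commenter"),
]
scores = score_skills(signals)
```

Here two authored pull requests dominate the Kubernetes score, while a single review comment contributes only weak evidence for React.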

Model selection matters here. Transformer models fine-tuned on domain-specific corpora tend to produce better extractions. A generic LLM might recognize Python as a skill, but a smaller, task-specific model trained on engineering logs is more likely to capture depth, such as whether someone is using Pandas for data wrangling or building custom APIs.

Inference pipelines also need to factor in latency and cost. Real-time skill detection is attractive but expensive. Batch processing on a daily, weekly, or monthly schedule is often a better tradeoff between freshness and cost. Many teams start with daily or weekly pipelines and move toward real-time only where business needs justify it.

Link Skills to Work, Not Just Learning

Once skills are detected, the default move is to recommend training. But AI systems that only feed learning pathways miss a larger opportunity—linking skill signals to real work. Skills grow through application. AI can help connect capability data to upcoming projects, internal roles, mentoring needs, and cross-functional requests.

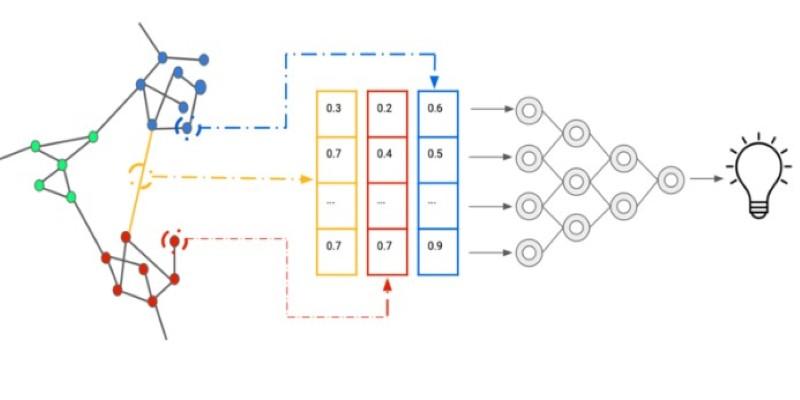

Embedding-based matching can make these connections more flexible. Instead of relying on hard-coded tags, AI can translate both opportunity descriptions and skill profiles into dense vectors. This allows for more nuanced comparisons. For instance, someone showing increased depth in security-focused React development could be a match for an initiative involving OAuth token management, without either being manually tagged.
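The matching step itself can be sketched as a cosine-similarity ranking. The example assumes the vectors have already been produced by some embedding model; the three-dimensional toy vectors, the names, and the `rank_matches` helper are hypothetical and exist only to show the mechanics.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_matches(opportunity_vec, profiles, top_k=2):
    """Return the top_k (name, similarity) pairs, most similar first."""
    scored = [(name, cosine(opportunity_vec, vec)) for name, vec in profiles.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


# Toy vectors standing in for real embeddings.
# Axes are loosely (frontend, security, data) for readability.
profiles = {
    "alice": [0.8, 0.6, 0.0],  # security-focused frontend work
    "bob": [0.1, 0.1, 0.9],    # mostly data work
    "chen": [0.9, 0.1, 0.1],   # frontend with little security exposure
}
oauth_initiative = [0.6, 0.8, 0.0]

matches = rank_matches(oauth_initiative, profiles)
```

With these toy values, the profile combining frontend and security depth ranks first even though no shared tag connects it to the initiative. A real system would use much higher-dimensional vectors and usually an approximate nearest-neighbor index rather than a full scan.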

Embedding models go stale. If they are not retrained or replaced regularly as project data and terminology evolve, they start returning outdated or irrelevant matches. A model trained in early 2023 might not recognize skill growth in retrieval-augmented generation (RAG) because the term wasn’t widely used then. Keeping these models fresh is critical.

Accuracy improves with feedback loops. Recommendations should be scored or reviewed, not just acted on. A low acceptance rate signals poor matching logic or stale data. Humans need to validate matches not only to avoid bad outcomes but to improve the model’s internal representations. Feedback should influence both the retrieval function and any classification thresholds.
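As a minimal sketch of the threshold side of this loop, the code below computes an acceptance rate from reviewer decisions and nudges the minimum similarity score accordingly. The target rate, step size, and bounds are assumed values for illustration, and the sketch does not cover feeding feedback into the retrieval function itself.

```python
def acceptance_rate(reviews):
    """reviews: list of booleans, True where a reviewer accepted the match."""
    return sum(reviews) / len(reviews) if reviews else None


def adjust_threshold(threshold, rate, target=0.6, step=0.05, lo=0.3, hi=0.95):
    """Tighten the similarity cutoff when acceptance is low; relax it when
    acceptance is comfortably above target. All parameters are assumptions."""
    if rate is None:
        return threshold  # no feedback yet, leave the cutoff unchanged
    if rate < target:
        threshold += step
    elif rate > target + 0.2:
        threshold -= step
    return round(min(hi, max(lo, threshold)), 2)


reviews = [True, False, False, False, True]
rate = acceptance_rate(reviews)
new_threshold = adjust_threshold(0.70, rate)
```

A low acceptance rate raises the cutoff so that fewer, stronger matches are shown. Persistent low acceptance after several adjustments would point to stale data or a stale model rather than a threshold problem.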

Don’t overlook friction points in integration. For skill-to-work recommendations to matter, they need to show up where planning and staffing decisions happen. That means surfacing them inside workforce planning tools, project management dashboards, or resource allocation workflows. Standalone systems, no matter how smart, will get ignored.

Evolve Skill Models Over Time—Treat Them Like Products

A major reason skill systems become irrelevant is that they freeze. Skill taxonomies hardcoded in spreadsheets or platforms reflect the world as it was when they were created. AI enables a different approach: treating skills as a product that’s constantly updated, refined, and shaped by usage.

This involves maintaining a dynamic skill graph. Instead of lists or hierarchies, graphs let you map relationships: which skills tend to appear together, which ones lead to others, which are trending upward. For example, involvement in LLMOps projects may be linked to adjacent skills like prompt engineering, model evaluation, or LangChain usage. These aren't always captured in traditional catalogs.

Graphs must be curated. AI can propose new nodes and edges based on frequency or proximity in embeddings, but human domain experts should validate each proposal before it is added. Otherwise, you'll end up with false relationships that pollute the signal. For instance, frequent mentions of “Docker” near “logging” don’t necessarily mean they belong in the same skill cluster.
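The propose-then-validate flow might look like the following sketch, which counts skill co-occurrence across projects and holds every candidate edge as pending until a reviewer approves it. The function names, the `min_count` cutoff, and the sample projects are hypothetical.

```python
from collections import Counter
from itertools import combinations


def propose_edges(projects, min_count=2):
    """Count skill pairs that co-occur in projects.
    Returns candidates for review, not confirmed graph edges."""
    pair_counts = Counter()
    for skills in projects:
        for a, b in combinations(sorted(set(skills)), 2):
            pair_counts[(a, b)] += 1
    return [
        {"edge": pair, "count": n, "status": "pending_review"}
        for pair, n in pair_counts.most_common()
        if n >= min_count
    ]


def approve(graph, candidate, reviewer):
    """Add an edge to the graph only after a named reviewer signs off."""
    candidate["status"] = "approved"
    candidate["reviewer"] = reviewer
    a, b = candidate["edge"]
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)


projects = [
    ["llmops", "prompt engineering", "model evaluation"],
    ["llmops", "prompt engineering"],
    ["docker", "logging"],
]
candidates = propose_edges(projects)
graph = {}
approve(graph, candidates[0], reviewer="domain_expert")
```

Only the pair seen in at least two projects becomes a candidate, and nothing reaches the graph without an explicit approval step.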

Prioritization matters. Not every detected skill is valuable. Some might be obsolete, niche, or irrelevant to your business context. Weighting can help—based on usage frequency in high-impact projects, business priority, or scarcity within the workforce. These weights can be dynamically adjusted through a mix of usage logs, business strategy shifts, and manager input.
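A simple way to express this weighting is a linear blend of the three factors. The sketch assumes each input has already been normalized to the range 0 to 1; the coefficients and the sample skills are made up for the example and would need tuning against real usage logs and strategy input.

```python
def skill_weight(usage, priority, scarcity, coeffs=(0.4, 0.4, 0.2)):
    """Blend three inputs, each in [0, 1], into a single priority weight.
    The coefficients are illustrative assumptions."""
    for value in (usage, priority, scarcity):
        if not 0.0 <= value <= 1.0:
            raise ValueError("inputs must be normalized to [0, 1]")
    a, b, c = coeffs
    return a * usage + b * priority + c * scarcity


# (usage in high-impact projects, business priority, scarcity in the workforce)
skills = {
    "kubernetes": (0.9, 0.8, 0.5),
    "prompt engineering": (0.4, 0.9, 0.8),
    "legacy_framework": (0.1, 0.0, 0.9),
}
weights = {name: skill_weight(*factors) for name, factors in skills.items()}
ranked = sorted(weights, key=weights.get, reverse=True)
```

In this example the scarce but strategically irrelevant skill lands at the bottom, which is the behavior the weighting is meant to produce. Changing `coeffs` is how a shift in business strategy or manager input would be reflected.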

Skill models also need a versioning approach. As with software, changes to the model or the underlying graph should be documented, tested, and communicated. If weights shift or nodes are removed, downstream systems that rely on the model—like learning platforms or mobility engines—need to adjust. Otherwise, mismatches and confusion follow.
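To make such changes visible, a release step could diff two versions of the model and flag anything downstream systems must react to. This sketch represents each version as a plain skill-to-weight mapping, which is a simplifying assumption; the function names and sample data are hypothetical.

```python
def diff_versions(old, new):
    """Compare two skill-model versions given as {skill: weight} dicts."""
    shared = set(old) & set(new)
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "reweighted": sorted(s for s in shared if old[s] != new[s]),
    }


def needs_downstream_update(changes):
    """Removed nodes or shifted weights require consumers to adjust."""
    return bool(changes["removed"] or changes["reweighted"])


v1 = {"kubernetes": 0.8, "prompt engineering": 0.5, "legacy_framework": 0.2}
v2 = {"kubernetes": 0.8, "prompt engineering": 0.7, "model evaluation": 0.6}

changes = diff_versions(v1, v2)
notify = needs_downstream_update(changes)
```

The resulting diff can double as a changelog entry and as the trigger for notifying learning platforms or mobility engines.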

Finally, treat model observability seriously. Logs, audits, and dashboards should track how often skill inference runs, how fresh the inputs are, what the average match confidence looks like, and where drop-offs occur in downstream usage. This lets you spot degradation before it impacts business decisions.
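A health check over inference run logs could be sketched as follows. The log fields, the seven-day freshness limit, the confidence floor, and the fixed reference date are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean


def health_report(runs, now, max_input_age=timedelta(days=7), min_confidence=0.6):
    """Summarize inference runs and flag stale inputs or weak match confidence."""
    latest = max(runs, key=lambda r: r["ran_at"])
    input_age = now - latest["newest_input_at"]
    avg_confidence = mean(r["mean_confidence"] for r in runs)
    alerts = []
    if input_age > max_input_age:
        alerts.append("stale_inputs")
    if avg_confidence < min_confidence:
        alerts.append("low_confidence")
    return {
        "run_count": len(runs),
        "input_age_days": input_age.days,
        "avg_confidence": round(avg_confidence, 2),
        "alerts": alerts,
    }


# Arbitrary fixed date so the example is deterministic.
now = datetime(2024, 6, 30, tzinfo=timezone.utc)
runs = [
    {"ran_at": now - timedelta(days=14), "newest_input_at": now - timedelta(days=15), "mean_confidence": 0.72},
    {"ran_at": now - timedelta(days=7), "newest_input_at": now - timedelta(days=10), "mean_confidence": 0.58},
    {"ran_at": now, "newest_input_at": now - timedelta(days=9), "mean_confidence": 0.44},
]
report = health_report(runs, now)
```

In this sample the pipeline keeps running on schedule, yet its inputs are more than a week old and confidence is trending down, so both alerts fire. That is the kind of quiet degradation a dashboard should surface.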

Conclusion

AI reveals skills that static systems miss, but it must be used with real data, tied to actual work, and built to evolve. The aim isn’t just skill detection—it’s linking skills to business impact, growth, and opportunity. Organizations that treat skills as dynamic, living systems gain clarity, adaptability, and better workforce decisions. The result isn’t just improved insights—it’s a workforce that understands its strengths and sees where to grow.