It's not hard to understand why many people feel stuck when dealing with insurance. Filing a claim often means bracing for delays, poor communication, confusing paperwork, and long resolution times. Whether it's auto, health, or property coverage, the experience can be draining even for the most patient customers. Complaints usually follow a pattern: people struggle to get answers, are passed from one agent to another, and often feel like they're being worn down instead of helped. While insurers have started to digitize some processes, these fixes don’t always reach the core issues. That’s where artificial intelligence might make a difference.

Claims Processing Needs More Than Just Automation

Most insurance companies have already automated portions of their claims workflows. Optical character recognition (OCR) can read forms. Rule-based engines can flag missing information. But automation alone doesn’t account for context, unusual scenarios, or complex disputes. That’s why claims still stall, especially at the points where human adjusters have to step in.

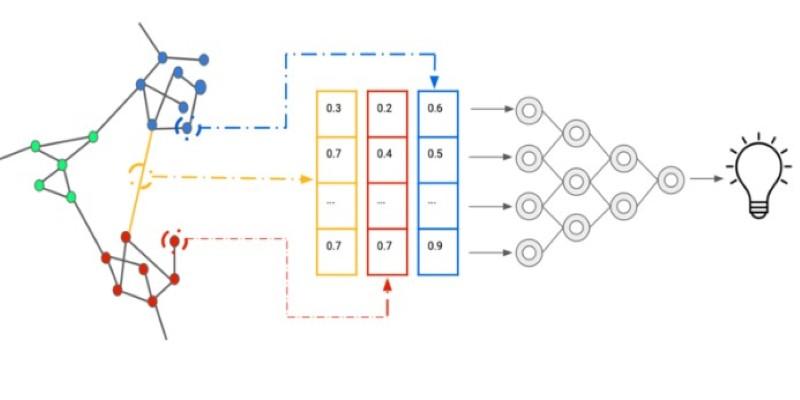

AI introduces a different layer of problem-solving. Large language models can parse policy documents, compare claim details against terms, and highlight inconsistencies that merit closer review. Computer vision models can analyze photos of damage and estimate repair needs, often more consistently than assessments that vary from one adjuster to the next. These systems don’t replace human judgment outright, but they can surface the right data faster, reduce unnecessary delays, and help frontline agents spend more time resolving real issues instead of bouncing between screens.

This becomes especially useful when a claim falls outside the typical patterns. AI can use historical data to identify similar edge cases and how they were resolved. Instead of escalating every anomaly, the system can suggest next steps that align with past decisions, improving both speed and consistency.

Customer Service Still Lags—AI Might Actually Fix It

Getting help from an insurance company is often a test of endurance. Phone queues, generic responses, and clunky chatbots are the norm. Even when live agents are available, they may not have access to your full history or the context needed to help quickly.

With the right implementation, AI can create a more coherent support experience. Large models fine-tuned on internal support transcripts can answer questions with nuance, not just scripted lines. They can also summarize claim status across multiple internal systems, allowing a single point of contact to provide accurate updates.

Another shift is in tone. Traditional bots often misunderstand what people are asking, especially when emotion or urgency is involved. AI models trained on real customer language are better at interpreting these cues. They can escalate serious issues appropriately and respond in a tone suited to the conversation: calm, and without robotic repetition.

However, the benefit hinges on data integration. If a model can’t see across systems—policy info, past claims, call logs—then it’s just guessing. The real gains come when these tools are connected to the insurer’s back-end in meaningful ways. That takes deliberate engineering and internal buy-in, not just a chatbot layered on top.

Preventing Model Drift and Bias in High-Stakes Decisions

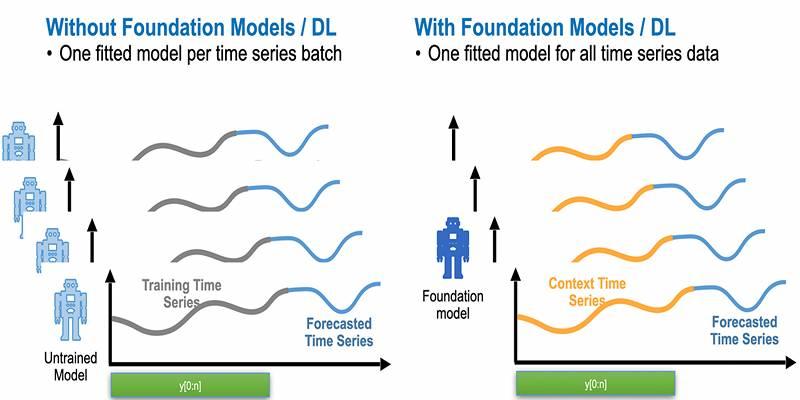

Insurance is a regulated industry, which adds pressure to ensure AI systems behave predictably and fairly. One real concern is model drift: a model’s accuracy declines over time because the data it was trained on no longer reflects current conditions. For example, if repair costs rise in a region or claim patterns change after new weather events, an outdated model might underpredict payouts.

This isn’t just a technical issue. It becomes a trust issue if customers start seeing inconsistent or incorrect decisions. To address this, insurers need to establish retraining schedules and performance monitoring pipelines. The system must track accuracy across different segments—geography, claim type, customer profile—to catch signs of drift early.
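As a rough illustration of segment-level monitoring, the sketch below computes accuracy per segment and flags segments that have fallen below a stored baseline. The record fields (`predicted`, `actual`, `region`), the function names, and the 5-point threshold are all assumptions made for this example, not a description of any insurer's pipeline.

```python
from collections import defaultdict


def accuracy_by_segment(records, segment_key):
    """Return {segment: accuracy} for records with 'predicted' and 'actual' fields."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for record in records:
        segment = record[segment_key]
        totals[segment] += 1
        if record["predicted"] == record["actual"]:
            hits[segment] += 1
    return {segment: hits[segment] / totals[segment] for segment in totals}


def flag_drift(baseline, current, max_drop=0.05):
    """List segments whose accuracy fell more than max_drop below the baseline.

    The 0.05 default is an illustrative threshold, not a recommended value.
    """
    return sorted(
        segment
        for segment, accuracy in current.items()
        if segment in baseline and baseline[segment] - accuracy > max_drop
    )
```

The same two functions can be run with `segment_key` set to claim type or customer profile, so one monitoring job can cover every segment the team cares about.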

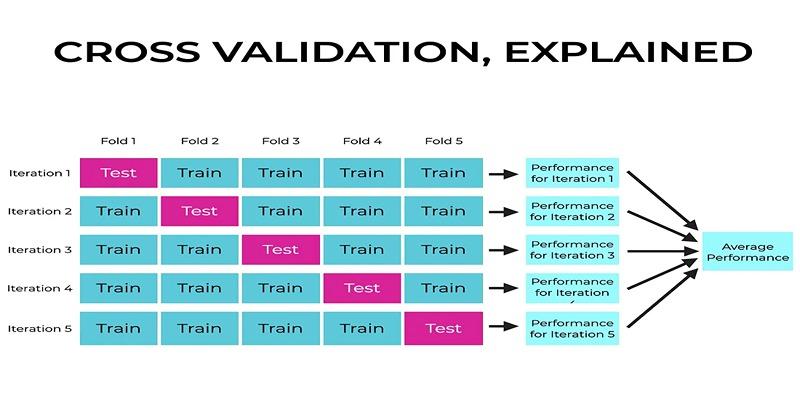

Bias is another concern. If a model is trained on past decisions that included human bias—conscious or not—it can replicate or even amplify those patterns. This is especially risky in claim denial scenarios. A well-designed AI pipeline will include steps for auditing decisions and flagging disparities. Some insurers now use synthetic data or counterfactual examples during training to reduce these risks.
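One simple form of the audit step described above is to compare denial rates across groups and flag large gaps for human review. The sketch below assumes each decision is a dict with a boolean `denied` field and a grouping field. The names and the 10-point gap are hypothetical, and a flagged gap is a prompt for investigation rather than proof of bias.

```python
from collections import Counter


def denial_rates(decisions, group_key):
    """Return {group: share of claims denied} for the given grouping field."""
    totals = Counter()
    denials = Counter()
    for decision in decisions:
        group = decision[group_key]
        totals[group] += 1
        if decision["denied"]:
            denials[group] += 1
    return {group: denials[group] / totals[group] for group in totals}


def flag_disparities(rates, max_gap=0.10):
    """List groups whose denial rate exceeds the lowest group's rate by more than max_gap.

    The 0.10 default is an illustrative threshold; real benchmarks are a policy choice.
    """
    lowest = min(rates.values())
    return sorted(group for group, rate in rates.items() if rate - lowest > max_gap)
```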

Importantly, these safeguards need to be baked into the deployment process, not added after the fact. The challenge isn’t just technical; it’s organizational. Teams have to agree on fairness benchmarks, audit frequency, and how to act on what they find. Otherwise, the system becomes opaque and hard to correct.

Building for Reliability, Not Just Speed

One of the promises of AI in insurance is faster turnaround—shorter claims cycles, quicker responses. But speed alone doesn’t guarantee better outcomes. What matters more is reliability. Customers want clear updates, accurate decisions, and timely resolution.

That puts pressure on insurers to balance performance with system stability. Models used in customer-facing roles must have tight guardrails. If a claim summary is generated, there should be a confidence score tied to it. If a next-best-action is suggested to an agent, it should be traceable—based on past cases, current inputs, or business rules.

Infrastructure matters too. Inference latency becomes a bottleneck as request volume grows. If every customer request has to hit a large cloud-hosted model, the experience will lag. Some companies have started using smaller distilled models for front-end tasks while offloading deeper analysis to larger systems running in the background. Others are experimenting with hybrid pipelines that trigger model calls only when simpler heuristics fall short.
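A hybrid pipeline of this kind can be sketched in a few lines: try cheap rules first and call the model only when none of them match. The keyword rules, the `Routing` type, and the `model_call` parameter are placeholders invented for this example. A real system would use its own intent rules and model client.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Routing:
    answer: str
    source: str  # "heuristic" or "model"


def heuristic_answer(message: str) -> Optional[str]:
    """Handle common requests with simple keyword rules; return None if unsure."""
    text = message.lower()
    if "claim status" in text:
        return "STATUS_LOOKUP"
    if "office hours" in text:
        return "OFFICE_HOURS"
    return None


def route(message: str, model_call: Callable[[str], str]) -> Routing:
    """Use the heuristic when it matches; otherwise fall back to the model."""
    quick = heuristic_answer(message)
    if quick is not None:
        return Routing(quick, "heuristic")
    return Routing(model_call(message), "model")
```

Recording the `source` alongside each answer also makes it easy to measure how often the expensive path is actually taken.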

And then there’s documentation. Insurance decisions can be challenged in court. If AI played a role in a denial, there must be a transparent record of how that conclusion was reached. That requires consistent logging, reproducibility, and a clear policy on human override. Without these, the risk of litigation—or regulatory pushback—increases.
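To make the logging requirement concrete, here is a minimal sketch of an audit record for a single AI-assisted decision. The field names are assumptions chosen for illustration. The idea is to store the model version, a hash of the exact inputs, the confidence score, and any human override, so the decision can be traced and reproduced later.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(claim_id, model_version, inputs, decision,
                       confidence, human_override=None):
    """Build a log entry describing one AI-assisted decision.

    Inputs are serialized with sorted keys so identical inputs always
    produce the same hash, regardless of key order.
    """
    payload = json.dumps(inputs, sort_keys=True)
    return {
        "claim_id": claim_id,
        "model_version": model_version,
        "input_sha256": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
        "decision": decision,
        "confidence": confidence,
        "human_override": human_override,  # None when no adjuster intervened
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }
```

In practice the record would be written to append-only storage, and the raw inputs would be retained separately under whatever retention policy applies.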

Conclusion

Insurance doesn't need more apps or flashy tools. It needs systems that reduce the friction customers face when filing claims or seeking help. AI isn't a magic fix, but it can simplify processes, speed up resolutions, and improve consistency. The value lies in solid integrations, ongoing monitoring, and focusing on reliability over trendiness. Insurers that invest in this approach may face fewer complaints and build more trust. The technology is ready. The real question is whether companies will use it with the depth and discipline it demands.