Generative AI has immense potential, but its knowledge is limited to its training data. Retrieval-Augmented Generation (RAG) overcomes this by connecting large language models (LLMs) to external data for more accurate, context-aware responses. Building a reliable RAG system is challenging, and effective evaluation is crucial to move from a basic prototype to a powerful, enterprise-grade AI solution.

What is a RAG System?

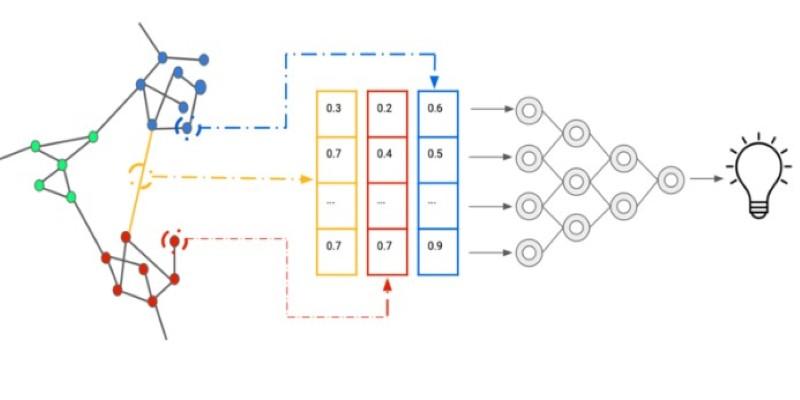

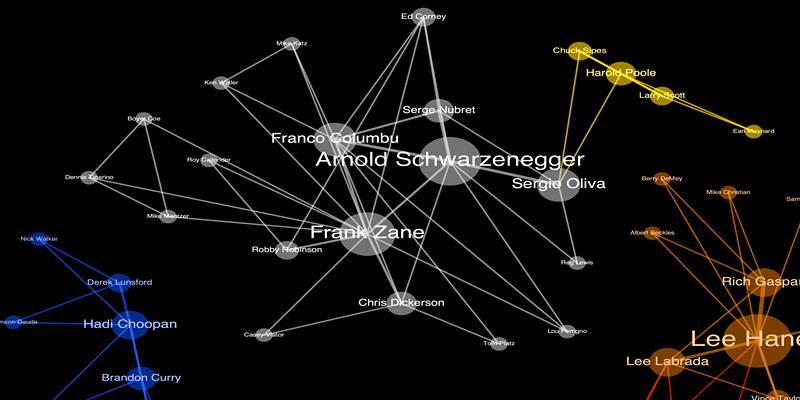

A RAG system is a form of AI architecture that provides more functionality to a large language model (LLM). It operates by linking LLM to the outside knowledge base, e.g. internal documents of a company, a database or live internet.

Whenever a user makes a query, the RAG system will first access information that is relevant on this knowledge base. It then transmits this retrieved information together with the query to the LLM. It is against this backdrop that the LLM draws up a holistic and precise response. This will enable the model to give answers that are not just derived out of its prior trained knowledge but also grounded on particular, timely, and proprietary data.

The overall advantage of RAG is that it serves to correct the most frequent problems of LLM such as hallucinating facts (creating things which are not real) and giving out of date information. As a credible source of truth, RAG helps make AI applications more reliable and practical by basing the responses of the LLM on the latter.

Why is RAG Evaluation So Important?

The construction of a RAG pipeline is a system that features many integrating components: the retriever, reranker, context engine, and generator (LLM). All these components may fail. Any weakness in each part of the chain may result in low quality responses, loss of user confidence and deletes the value of the application.

Such weak links could be only eliminated with the help of proper assessment. It helps you:

- Measure Performance Objectively: Instead of relying on gut feelings, evaluation provides concrete data on how well your system is performing.

- Identify Bottlenecks: Is the retriever failing to find the right documents? Is the LLM misinterpreting the context? A structured evaluation process helps pinpoint exactly where the system is breaking down.

- Build User Trust: Users need to be confident that the answers they receive are accurate and reliable. Demonstrating that your system has been rigorously tested and optimized is crucial for adoption.

- Optimize for Better Results: Once you identify weaknesses, you can experiment with different components, settings, and models to improve performance. Evaluation guides this iterative process, ensuring your changes lead to real improvements.

In the absence of evaluation, there is a risk of introducing a product which is unreliable, gets the information wrong and does not meet the expectations of the users.

Key Metrics for Evaluating RAG Systems

To assess a RAG system, an assessment criterion must be the process of retrieval and also the output generated. The assessment can be decomposed into several major areas and each of them has its own measures.

Context Retrieval Metrics

Relevant context is the first stage of a RAG pipeline. The wrong information will be pulled and the ultimate answer will be defective, regardless of the potency of the LLM. This stage is measured on two fundamental scales (Context Precision and Context Recall).

Context Precision

This measure is used to measure the relevance of the retrieved documents. Of the entirety of the documents that the retriever retrieves, what proportion are of use in addressing the query put in by the user? When the precision score is high, then the system is good at locating signal and disregarding noise. When the score is low, it means that the retriever is recalling much irrelevant information, and this may confuse the LLM.

Context Recall

This metric measures whether the retriever found all the relevant information available in the knowledge base. Did it miss any crucial documents? A high recall score is important for ensuring the final answer is comprehensive. Low recall means important context is being left out, leading to incomplete or misleading answers.

Generated Answer Metrics

After retrieving the context, the LLM uses it to come up with an answer. In this case, you have to measure the end product quality. Faithfulness, Answer Relevance and Answer Correctness are the three most important metrics.

Faithfulness

This is a quality that determines that the answer that has been generated is factually consistent with the context given. Is there information being made up by the LLM or is the LLM hallucinating? An honest response confines itself to facts contained in documents that have been retrieved. A poor score in faithfulness is a big marker since it implies the system is producing fake information.

Answer Relevance

It is a measure of the quality of the generated answer answering the initial query of the user. Does the answer go to the point? At times, a factual answer may be true, and true to the situation, yet not simply answering the question the user posed. A high level of answer relevancy makes the system look really useful.

Answer Correctness

This is the final quality measure. It is the conjunctions of being faithful and relevant to answer the question whether, as a matter of fact, the answer is right. This metric may need some ground truth or a human verified proper answer to compare to. Although it is harder to measure automatically, it is the most significant measure of the overall performance of the system.

How to Set Up a RAG Evaluation Framework

Now that you know the key metrics, how do you go about implementing an evaluation framework? Here is a simplified, step-by-step approach:

Create an Evaluation Dataset

You need a set of questions and, ideally, human-verified "golden" answers. This dataset should cover a wide range of topics and question types that your users are likely to ask. This dataset will be your benchmark for measuring performance.

Use LLM-as-Judge

Manually checking every single output is not scalable. A powerful technique is to use another, more advanced LLM (like GPT-4) as a "judge." You can use carefully designed prompts to ask the judge LLM to score the RAG system's outputs on metrics like faithfulness and answer relevance. For example, you would provide the judge with the generated answer and the source context and ask, "Is the generated answer fully supported by the provided context? Answer with a simple 'yes' or 'no'."

Run Experiments and Track Results

Once your evaluation pipeline is in place, you can start experimenting. Swap out the retriever model, try a different LLM for generation, or adjust the number of documents retrieved. After each change, run your evaluation dataset through the system and compare the metric scores.

Analyze and Iterate

Look at the results to identify patterns. Are you seeing low context recall? Maybe you need a better embedding model. Is faithfulness an issue? You might need to tweak your prompt to the generator LLM to be more strict. This data-driven approach allows you to systematically improve your application.

Conclusion

Building a high-performing RAG application demands continuous improvement and rigorous evaluation. This is the next step after prototypes to convert it into a trustworthy, enterprise scale tool. Concentrating on such important metrics as context precision, recall, faithfulness and relevance of answers provides the insights to the issues to diagnose and optimize performance. This quality commitment will create user trust and success in the long run. Analysis is the thing that takes a good RAG system to the great heights.